Computer Science Professors Reflect on Online Accountability and Disinformation

As we learn more about the insurrection at the U.S. Capitol on January 6 and the events leading up to it, the role of technology and social media in spreading false information has emerged as a central issue. We asked Eni Mustafaraj, assistant professor of computer science, and P. Takis Metaxas, professor of computer science and department chair, to reflect on online accountability and the spread of disinformation. This is the first of two parts.

To what extent was internet disinformation the spark of January’s insurrection?

P. Takis Metaxas: Disinformation (that is, misinformation with an intent to do damage) definitely played a major role in sparking the January 6 insurrection. What sparked it was the lie that the election was stolen, a lie spread across social media platforms by former President Trump and his supporters, along with the absurd claim that the party that lost by 7 million votes had actually won.

Believing lies can have very bad consequences. Believing that you can be cured of COVID-19 by drinking chlorine can kill you, but a trusted authority broadcasting that lie can kill thousands of people who trust him. Believing that the losing party has won makes you a fool, but the president broadcasting the lie that the election was stolen can kill democracy.

Eni Mustafaraj: When talking about the storming of the Capitol, it is good to start with the main instigator. Donald Trump had been chanting his refrain of voter fraud and a stolen election for months, if not years, via his Twitter account. The Republican political establishment went along with it and still does. Meanwhile, like Trump and his enablers, the people who stormed the Capitol were feasting on white nationalism, the ideology that still pervades much of the political infrastructure of this country, built on the systematic, centuries-long disenfranchisement of people of color. Whose votes was Trump keen to disqualify through lawsuits or intimidation after he lost? Those of Black voters in Atlanta, Detroit, and Philadelphia.

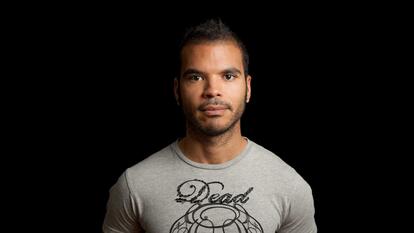

Eni Mustafaraj, assistant professor of computer science

Disinformation and propaganda are not inventions of the internet, even though the internet makes it easier (less costly) for them to spread. The internet also makes it easy for groups to organize, but that is true for every cause people care about. And democracies have been failing and dying elsewhere in the world without the help of the internet. Homegrown terrorism is not something new in America.

What is the role of tech companies (Facebook, Google, etc.), if any, in holding people and organizations accountable for spreading disinformation?

Metaxas: The question implies that private companies could have a role in holding individuals and organizations accountable for spreading disinformation. That would mean they have the right to decide what disinformation is. Such power would be terrific and terrifying. Can they really do it? Should they have the right to do it? I would answer no to both.

Tech companies could be responsible for stopping the spread of disinformation if someone could determine what disinformation is. Who would that someone be? It is acceptable to assign the responsibility of determining the truth to the courts, but the process is long and expensive. And even if that were feasible, the determination would have to be instantaneous, because by the time a court decides, the damage is already done and may not be easily undone. Once misled people have believed a lie, they cannot easily stop believing it. It is far easier to mislead someone than to show them that they have been misled.

Mustafaraj: Facebook and Google have already amassed too much power, which they have wielded unfairly in the past. Their reluctance or unwillingness to address harmful disinformation has angered many, which led to increased pressure on these companies. Recently, we saw the fruits of such sustained scrutiny: Facebook banned all Myanmar military accounts in the aftermath of the recent coup, something it refused to do a few years ago during the Rohingya genocide. Let’s not forget that it was a collective, unexamined adoration of Silicon Valley that shielded these companies from accountability for too long.

If we think of disinformation as an assault on truth, there have been many such prolonged assaults in the past, thriving on the pages of Google and Facebook: Holocaust denialism, racism, misogyny. The list is long. The assaulted groups kept raising their voices to be heard, but the unaffected majority was not paying attention. What has changed is who is targeted by disinformation (almost everyone) and how people are reacting to it (some by embracing it). Researchers have a name for this: “participatory disinformation.”

Policing speech that is otherwise protected in this country is an almost Sisyphean task. Moreover, Section 230 of the Communications Decency Act protects platforms from liability while allowing them to moderate at their discretion. They are not publishers of disinformation in the legal sense of the word “publisher.” But they should bear responsibility for the algorithmic amplification of misinformation, which has been financially beneficial to them. As the 2020 U.S. presidential election approached, they did a lot to correct the issues of the 2016 election: no more Macedonian teenagers spreading fake news about the Pope’s endorsement, and no more Russian fake accounts inciting divisiveness among Americans. However, no one had an easy solution for the disinformation coming from the White House.

I think it’s a mistake to believe that our current problems can somehow be solved through technology. Seventy-five million Americans voted for Trump, and a large majority of them believe the election was stolen. Should Facebook ban them all from its platform for saying that? While these new technologies have contributed to our political polarization, they are not the root causes of our current problems.